Making AI-Powered Mutation Testing Reliable and Fair

4 Jun 2025

Mitigating validity threats in LLM-based mutation testing by using diverse datasets, models, and rigorous validation methods for reliable results.

How Large Language Models Improve Mutation Testing—and What Still Needs Work

4 Jun 2025

Study reveals LLMs’ potential for generating realistic mutations for testing, highlights the role of prompt design, and points to challenges in generating compilable code.

Sensitivity Analysis of Experiment Parameters in Bug Mutation Studies

4 Jun 2025

This study analyzes how context length, few-shot examples, and mutation counts impact the accuracy and consistency of bug mutation testing results.

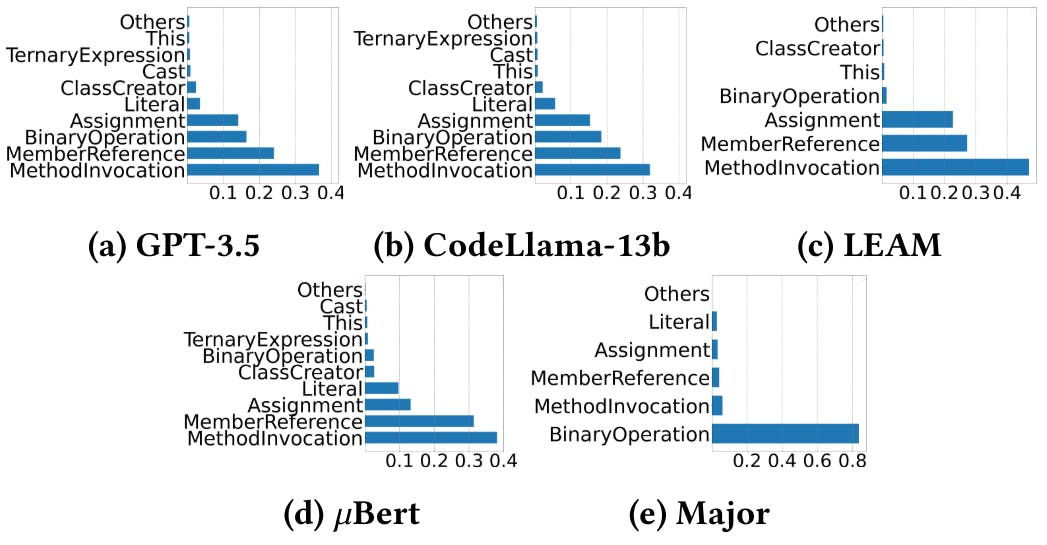

Evaluating GPT and Open-Source Models on Code Mutation Tasks

3 Jun 2025

Study compares GPT-4, GPT-3.5, and open-source LLMs on code mutation performance and analyzes the root causes of mutations that fail to compile.

How Prompt Complexity Affects GPT-3.5 Mutation Generation Accuracy

3 Jun 2025

Mutations generated by GPT-3.5 outperform those from CodeLlama and Major in real-bug detection, coupling rate, and semantic similarity on Defects4J and ConDefects.

Comparing Costs, Usability and Results Diversity of Mutation Testing Techniques

3 Jun 2025

Evaluating mutation testing methods on cloud GPUs: comparing cost, mutation quality, usability, and diversity between LLMs and traditional approaches.

Using LLMs to Mutate Java Code

3 Jun 2025

Explore how large language models generate and filter Java code mutations through prompt design, and how open-source and closed-source LLMs compare.

We Designed a Study to See If AI Can Imitate Real Software Bugs

3 Jun 2025

We outline our study design for evaluating LLMs in code mutation testing using real-world Java bug datasets and targeted research questions.

Mutation Testing with GPT and CodeLlama

3 Jun 2025

Explore how LLMs like GPT-4 and CodeLlama improve mutation testing in software engineering through smarter, more useful code mutations.